Integrating Ml5.js PoseNet model with Three.js

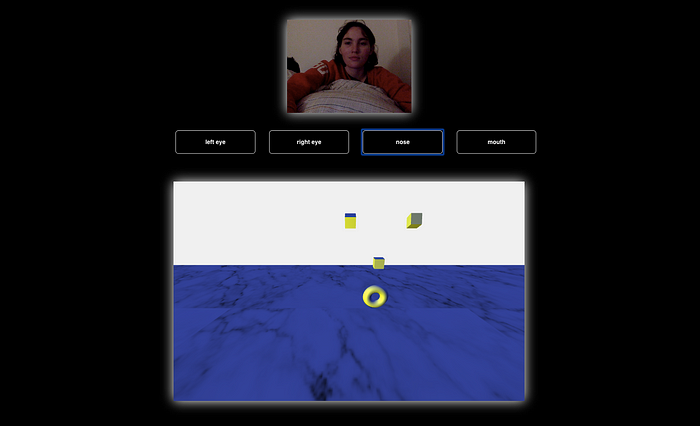

In this post I will explain how to integrate the Ml5.js library with Three.js to create an interactive app that lets users move rendered 3D objects with their face.

In its simplest form Three.js allows you to create a scene with a background and shapes positioned on an XYZ coordinate system. The Three.js documentation and many blogs out there do a great job of getting one started with this.

With some simple shapes in a scene you can then animate them by creating a function that calls “requestAnimationFrame” on itself and re-renders the scene. Below is a quick example taken from the Three.js docs:

const animate = function () {
  requestAnimationFrame( animate );

  cube.rotation.x += 0.01;
  cube.rotation.y += 0.01;

  renderer.render( scene, camera );
};

Writing this function allows your animation to be continuous. It is also where you can leverage the power of Ml5.js. Before I hit the ground running, let's talk a little bit about Ml5.js and PoseNet.

What is Ml5.js?

Ml5.js is described as a friendly machine learning tool built on top of TensorFlow.js.

A very basic explanation of what machine learning is: teaching computers to identify certain patterns.

I discovered Ml5.js while watching The Coding Train’s introductory video to this very library. The neat thing about Ml5.js is that it has a few models integrated into it, one of which is PoseNet.

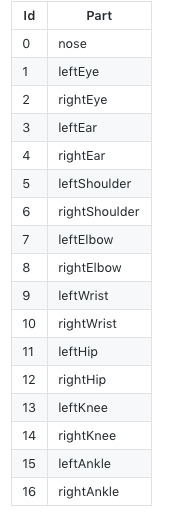

The PoseNet model allows your computer to identify body positions in an image or video feed. It outputs x and y coordinates for seventeen identifiable points. The image above shows you exactly which points those are.

Understanding how the outputted data is structured is important to leveraging the power of this model.

With every pose detected an object is outputted with the following information: a confidence score of the pose, and an array of key-points. The key-points are objects themselves. Each key-point has three keys: “position”, “part” and “score”.

- The “position” is also an object containing two keys, x and y, which are number values corresponding to the x and y coordinates in the image or video.

- The “part” is a string naming the body part.

- The “score” is a confidence score for that particular point.

aPose = {
  "score": 0.32371445304906,
  "keypoints": [
    {
      "position": {
        "y": 76.291801452637,
        "x": 253.36747741699
      },
      "part": "nose",
      "score": 0.99539834260941
    },
    {
      "position": {
        "y": 71.10383605957,
        "x": 253.54365539551
      },
      "part": "leftEye",
      "score": 0.98781454563141
    }
  ]
}

Knowing this, we can now start to think about how to use the x and y coordinates of a specific part of the body to translate over to the x and y positions of our 3D object.
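As a minimal sketch of reading this structure, the helper below looks up one body part in a pose object shaped like the one above. The name `findKeypoint` and the `minScore` threshold are my own choices for illustration and are not part of Ml5.js.

```javascript
// Hypothetical helper (not part of Ml5.js): returns the { x, y } position of
// the named part, or null if it is missing or below the confidence threshold.
function findKeypoint(pose, part, minScore = 0.2) {
  const keypoint = pose.keypoints.find((k) => k.part === part);
  if (!keypoint || keypoint.score < minScore) {
    return null;
  }
  return { x: keypoint.position.x, y: keypoint.position.y };
}

// Sample data in the same shape as the pose object shown above (values rounded).
const samplePose = {
  score: 0.32,
  keypoints: [
    { position: { x: 253.4, y: 76.3 }, part: 'nose', score: 0.99 },
    { position: { x: 253.5, y: 71.1 }, part: 'leftEye', score: 0.98 },
  ],
};

console.log(findKeypoint(samplePose, 'nose'));      // { x: 253.4, y: 76.3 }
console.log(findKeypoint(samplePose, 'leftWrist')); // null
```

Returning null for a missing or low-confidence part makes it easy to skip a frame instead of moving the shape based on a bad reading.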

First we want to set up our video feed by creating a video element and appending it to an already made div with the id “video”. I set the video to be a square since that is recommended by the PoseNet documentation.

const video = document.createElement('video')
const vidDiv = document.getElementById('video')

video.setAttribute('width', 255);
video.setAttribute('height', 255);
video.autoplay = true
vidDiv.appendChild(video)

Then we want to initialize the video feed.

navigator.mediaDevices.getUserMedia({ video: true, audio: false })
  .then(function(stream) {
    video.srcObject = stream;
  })
  .catch(function(err) {
    console.log("An error occurred! " + err);
  });

Connect the video feed to the Ml5 PoseNet model like so, making sure that your options flip the video horizontally so that your user's movements are correctly determined.

const options = {
  flipHorizontal: true,
  minConfidence: 0.5
}

// modelReady is a callback you define; it runs once the model has loaded
const poseNet = ml5.poseNet(video, options, modelReady)

Next we will define an event listener that will call two functions within it:

- “loopThroughPoses”

- the Three.js renderer.

This event listener will serve the same purpose as the “animate” function mentioned earlier. It will re-render our 3D scene every time a new pose is detected!

let nose = {}
// important to initialize an empty object which will
// store the x and y coordinates of your target body part
// in this case it is the nose!

poseNet.on('pose', function(results) {
  let poses = results;
  loopThroughPoses(poses, nose)

  let estimatedNose = { x: nose.x, y: nose.y }
  if (estimatedNose.x && estimatedNose.y) {
    render(estimatedNose, cube01, cube02, cube03)
  }
});

“loopThroughPoses” will loop through the outputted data for each pose and extract the desired data. My “loopThroughPoses” function was taken from the PoseNet docs and changed slightly to target the nose specifically!

function loopThroughPoses (poses, nose) {
  for (let i = 0; i < poses.length; i++) {
    let pose = poses[i].pose;
    for (let j = 0; j < pose.keypoints.length; j++) {
      let keypoint = pose.keypoints[j];
      if (keypoint.score > 0.2 && keypoint.part === 'nose') {
        nose.x = keypoint.position.x
        nose.y = keypoint.position.y
      }
    }
  }
}
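Note that with several people in frame, this loop keeps whichever qualifying nose comes last in the array. One possible variation, sketched here under the assumption that the results have the same `[{ pose: { keypoints } }]` shape used above, keeps the highest-scoring nose instead. `pickBestNose` is a hypothetical name, not something from the PoseNet docs.

```javascript
// Hypothetical variation on loopThroughPoses: returns the most confident nose
// across all detected poses, or null when none passes the threshold.
function pickBestNose(poses, minScore = 0.2) {
  let best = null;
  for (const { pose } of poses) {
    for (const keypoint of pose.keypoints) {
      const isBetter = best === null || keypoint.score > best.score;
      if (keypoint.part === 'nose' && keypoint.score > minScore && isBetter) {
        best = {
          x: keypoint.position.x,
          y: keypoint.position.y,
          score: keypoint.score,
        };
      }
    }
  }
  return best;
}

// Made-up results for two people in frame.
const sampleResults = [
  { pose: { keypoints: [{ part: 'nose', score: 0.6, position: { x: 40, y: 50 } }] } },
  { pose: { keypoints: [{ part: 'nose', score: 0.9, position: { x: 120, y: 80 } }] } },
];

console.log(pickBestNose(sampleResults)); // { x: 120, y: 80, score: 0.9 }
```

Because it returns a fresh object rather than mutating a shared one, the caller can check for null before rendering.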

The Three.js render function should take an object holding the target body part's x and y positions, along with the 3D object you wish to move. It then calculates the difference between the body part's current x and y positions and its previous position (which needs to be initialized to some value and from then on stored in its variable). This difference should then be multiplied by a decimal (I used 0.20) to scale it down to numbers that suit the Three.js coordinate system. Finally, add the scaled number to the shape's x and y coordinates. Here is an example:

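let lastXPosition = 100;
let lastYPosition = 100;
let changeX = 1;
let changeY = 1;

// remember that nose starts out as an empty object ({}) and is filled in by the 'pose' listener
const render = function (nose, cube) {
  changeX = nose.x - lastXPosition
  changeY = nose.y - lastYPosition

  cube.position.x += (changeX * 0.20)
  // video y grows downward while Three.js y grows upward, hence the sign flip
  cube.position.y += -(changeY * 0.20)

  // store the current position so the next call measures the change from here
  lastXPosition = nose.x
  lastYPosition = nose.y

  renderer.render(scene, camera);
}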

Processing the value that represents the change between poses allows you to easily translate from one coordinate system (the video feed) to the Three.js render window coordinate system. You can now experiment with scaling the changeX and changeY values in different ways to see what results you get! You can also add more shapes, and perhaps even more interactivity and target points.
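One way to experiment, offered as a sketch rather than what my repo does: clamp each per-pose change so that a single noisy detection cannot throw the shape off screen. The helper name `scaleAndClamp` and the `maxStep` default are assumptions for illustration; only the 0.20 factor comes from the example above.

```javascript
// Hypothetical helper: scales a change in video pixels down to scene units,
// then limits the result to the range [-maxStep, maxStep].
function scaleAndClamp(change, scale = 0.2, maxStep = 2) {
  const scaled = change * scale;
  return Math.max(-maxStep, Math.min(maxStep, scaled));
}

console.log(scaleAndClamp(5));   // 1
console.log(scaleAndClamp(50));  // 2 (clamped)
console.log(scaleAndClamp(-50)); // -2 (clamped)
```

Inside the render function, `scaleAndClamp(changeX)` would stand in for `changeX * 0.20`, and `-scaleAndClamp(changeY)` for the y axis.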

Feel free to explore my GitHub repo of this project!